Summary

This work was carried out during a gap year between the 2nd and 3rd years of my engineering studies. It took the form of a Research Aide contract, done remotely from France, with the team developing DeepHyper at Argonne National Laboratory (ANL), United States, under the supervision of Prasanna Balaprakash and Romain Egele.

The main goal was to help improve the scalability of DeepHyper by developing benchmarks, testing modules and algorithms, and conducting experiments with the package. The provided PDF is only a short report; more details are given below.

Contribution to the Package

DeepHyper is a scalable package for Automated Machine Learning. It focuses on Black-Box Optimization (BBO) and Neural Architecture Search (NAS) methods that can scale to thousands of parallel workers on supercomputers.

Documentation & Tutorial

My first tasks, meant to get me familiar with the package, were to refactor its official documentation and to create some of the tutorials provided there.

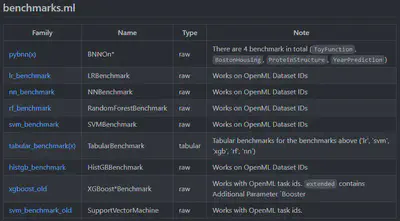

Benchmarks

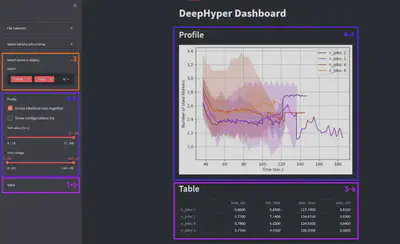

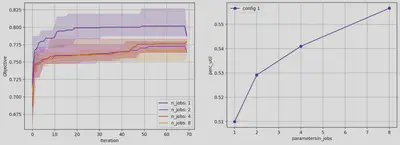

Analytics

perc_util can be graphed as a function of n_jobs.

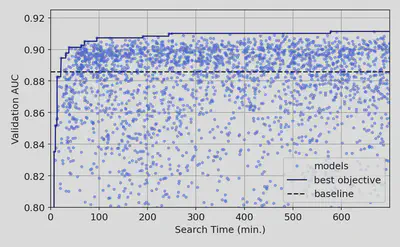

Application to the Fusion Problem

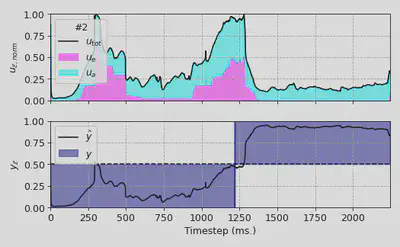

Another team at ANL was building a detector to predict disruptions (instabilities) in tokamak fusion plasmas in real time, before they happen [1]. They were developing a Recurrent Deep Neural Network that processes the sequential data from the various current, field, and temperature sensors and outputs an alarm-level signal; when this signal passes a certain threshold, the reaction is stopped before the disruption occurs. They were looking to optimize the hyperparameters of their model.
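To make the alarm mechanism concrete, here is a minimal sketch of the thresholding step only. It is not the team's code: the function name `first_alarm_step`, the example values, and the strict "greater than" comparison are all assumptions made for illustration.

```python
def first_alarm_step(alarm_levels, threshold):
    """Return the first time step whose alarm level exceeds the threshold.

    `alarm_levels` stands in for the per-time-step output of the recurrent
    model (hypothetical values here); returns None if no alarm is raised.
    """
    for step, level in enumerate(alarm_levels):
        if level > threshold:
            return step
    return None


# Hypothetical alarm signal rising as a disruption approaches.
signal = [0.10, 0.15, 0.40, 0.85, 0.95]
stop_at = first_alarm_step(signal, threshold=0.8)
```

In such a setup, the threshold itself is one of the values that could be tuned together with the model's hyperparameters.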

Contribution

I entirely refactored the code to make the training loop self-contained (so that it is compatible with BBO), as well as simpler and faster, by using as many TensorFlow functions as possible. I also made the dataloader 4 times faster: it was initially shifting the entire array of input data in order to feed each batch; I instead implemented a reading head that cycles over the array, and adapted the insertion of new inputs to this cyclic setup.
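The idea of a cycling reading head can be sketched with a small circular buffer. This is only an illustration in plain Python, not the actual dataloader: the class `CyclicBuffer` and its methods `push` and `read_batch` are hypothetical names, and the real implementation worked on arrays inside a TensorFlow pipeline.

```python
class CyclicBuffer:
    """Fixed-size buffer read through a moving head, so data is never shifted."""

    def __init__(self, capacity):
        self.data = [None] * capacity
        self.capacity = capacity
        self.head = 0  # index of the oldest stored element
        self.size = 0  # number of valid elements currently stored

    def push(self, item):
        # Write just past the newest element, wrapping around the end.
        tail = (self.head + self.size) % self.capacity
        self.data[tail] = item
        if self.size < self.capacity:
            self.size += 1
        else:
            # Buffer was full: the oldest element got overwritten,
            # so the head moves on to the next-oldest one.
            self.head = (self.head + 1) % self.capacity

    def read_batch(self, batch_size):
        # Collect the oldest items by index arithmetic, then advance the head.
        n = min(batch_size, self.size)
        batch = [self.data[(self.head + i) % self.capacity] for i in range(n)]
        self.head = (self.head + n) % self.capacity
        self.size -= n
        return batch


buf = CyclicBuffer(capacity=4)
for x in range(4):
    buf.push(x)
first = buf.read_batch(2)   # oldest two items
buf.push(4)                 # lands in the slots freed by the read
buf.push(5)
second = buf.read_batch(4)  # wraps around the end of the storage
```

Reading a batch only moves an index, so its cost depends on the batch size rather than on the buffer size, whereas shifting the whole array costs time proportional to the array length for every batch.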

Uncertainty Quantification

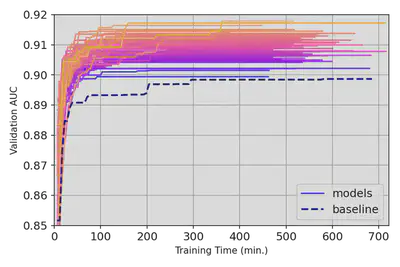

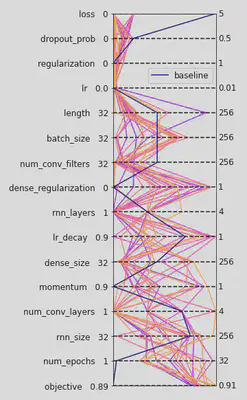

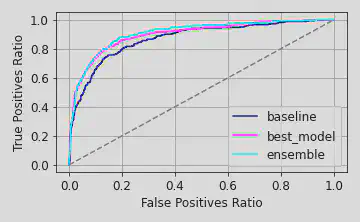

Results

[1] Kates-Harbeck, J., Svyatkovskiy, A. & Tang, W. Predicting disruptive instabilities in controlled fusion plasmas through deep learning. Nature 568, 526–531 (2019). https://doi.org/10.1038/s41586-019-1116-4